How Solid keeps a close eye on its AI, with LangSmith

Last week, we shared details of our move to an Agentic Platform. Today, we're sharing how we're using LangSmith to continuously improve its performance via deep observability.

When we say performance, we don’t just mean speed or cost. We also mean, and more importantly, accuracy and trust. As we’ve seen in the market, people are quick to lose trust in an AI-based solution if it gets things wrong, hallucinates, or simply isn’t helpful.

So, our number one focus is the reliability of our AI engine.

Before the move to the agentic platform, we used a comprehensive evals solution that mostly treated the engine as a black box: it fed inputs in and evaluated the outputs. Now, we can actually open up that box and peer inside.

The depth is incredible

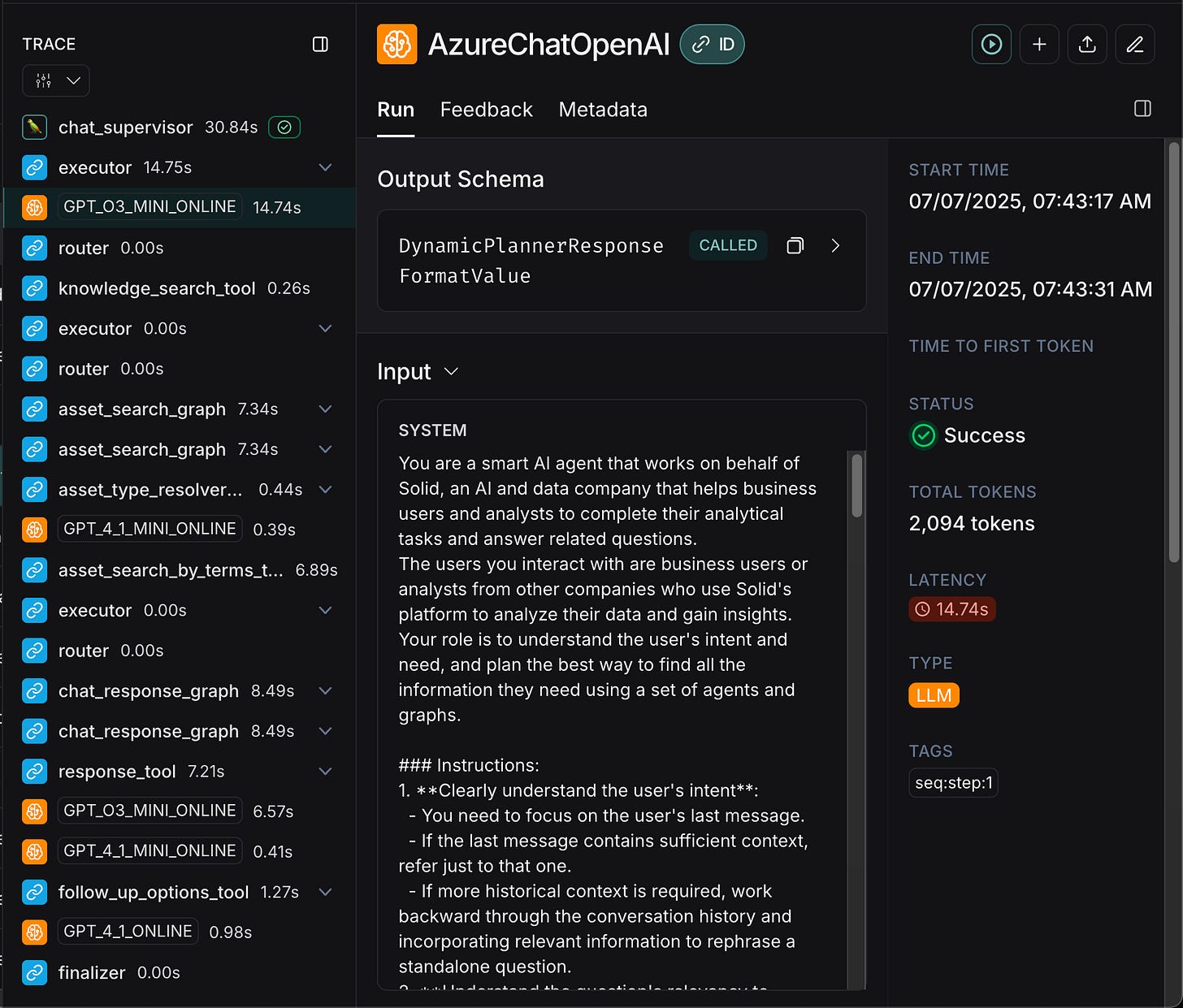

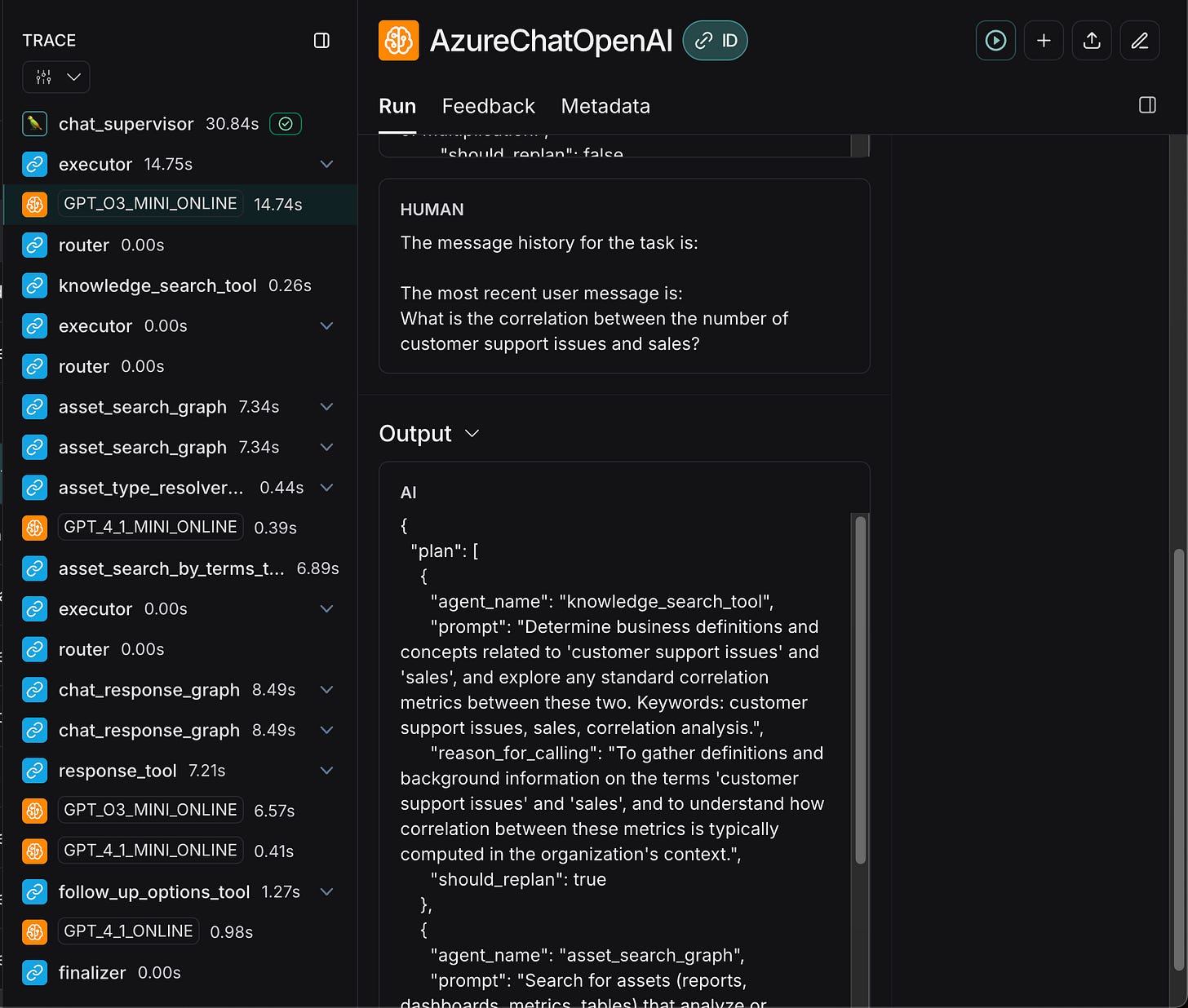

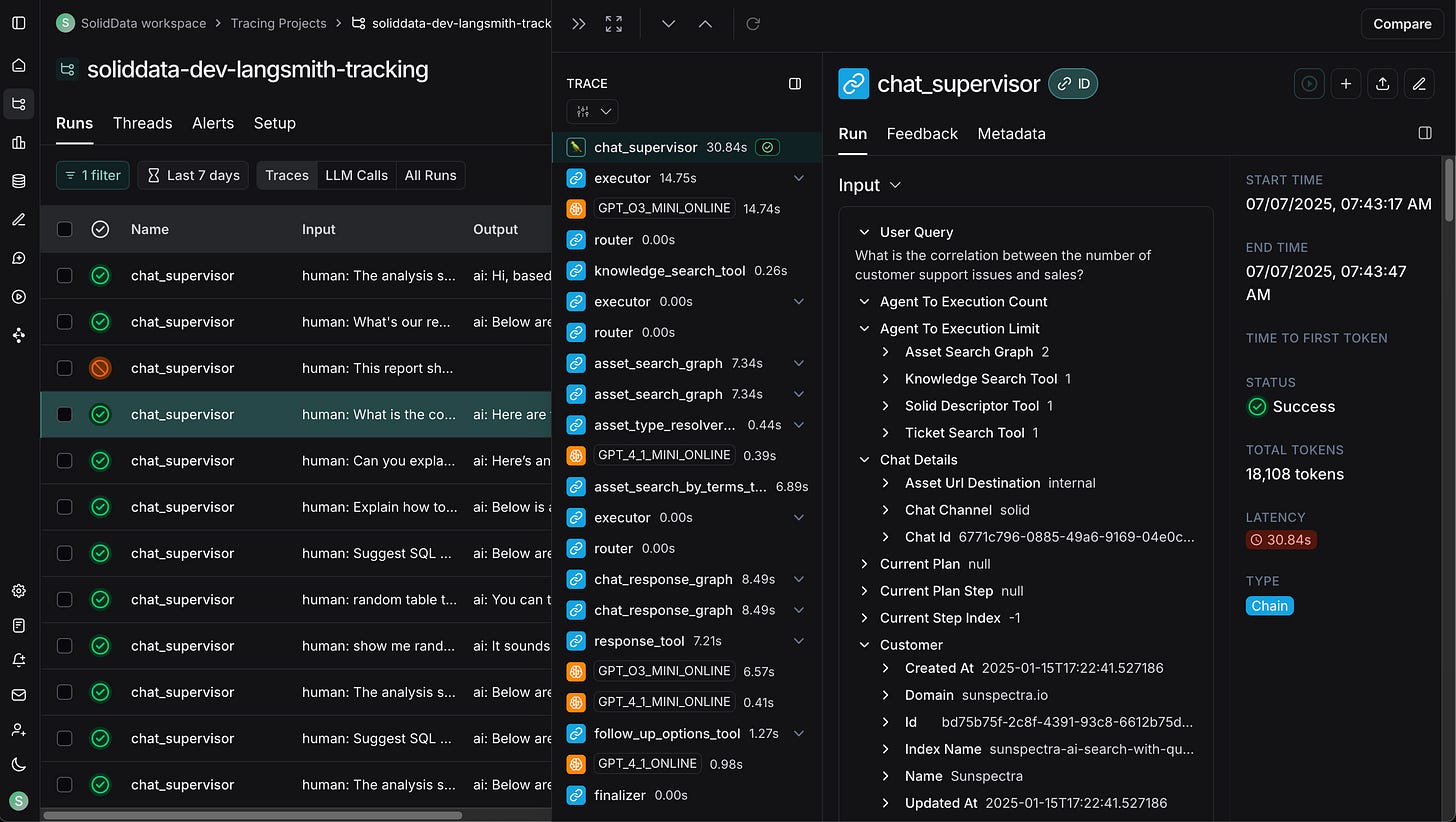

As you can see above, we can observe every step of every single interaction. As you may recall from our overview post, there’s a supervisor that comes up with a plan of action and has a set of tools it can use. It can revise its plan as it progresses and call the tools again and again.

In the example above, a simple user query (“What is the correlation between the number of customer support issues and sales?”) triggers a multi-step process in the platform.

The supervisor oversees it all and starts with a planner. Here, we use OpenAI’s o3-mini model to come up with a plan of action. The plan is a JSON object that lists each tool the planner wants to use and the input (prompt) for that tool.
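To make the plan format more concrete, here is a minimal sketch of what such a plan might look like and how it could be validated before anything runs. The post does not publish the real schema, so the field names (`steps`, `tool`, `input`), the tool names in `KNOWN_TOOLS`, and the `parse_plan` helper are all hypothetical.

```python
from __future__ import annotations

import json
from dataclasses import dataclass

# Hypothetical tool names; the actual tool set is not listed in the post.
KNOWN_TOOLS = {"sql_query", "python_analysis", "summarize"}


@dataclass
class PlanStep:
    tool: str   # which tool the planner wants to call
    input: str  # the prompt to pass to that tool


def parse_plan(raw: str) -> list[PlanStep]:
    """Parse the planner's JSON output into steps, rejecting tools we don't have."""
    data = json.loads(raw)
    steps = []
    for item in data["steps"]:
        if item["tool"] not in KNOWN_TOOLS:
            raise ValueError(f"planner requested unknown tool: {item['tool']}")
        steps.append(PlanStep(tool=item["tool"], input=item["input"]))
    return steps


# A plan the planner might plausibly emit for the correlation question (illustrative only).
example = json.dumps({
    "steps": [
        {"tool": "sql_query", "input": "Count customer support issues per month"},
        {"tool": "sql_query", "input": "Total sales per month"},
        {"tool": "python_analysis", "input": "Correlate monthly issue counts with monthly sales"},
    ]
})

plan = parse_plan(example)
for step in plan:
    print(step.tool, "->", step.input)
```

One benefit of validating the plan up front is that a malformed or hallucinated tool name fails fast, before any tool is called.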

The router handles plan execution, making sure each tool is executed as planned, and it can hand the process back to the planner if needed. As mentioned in our last post, we use different LLMs for different steps to balance output quality against cost and time.
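A router of this kind can be sketched as a loop over the plan that picks a model per tool and hands control back to the planner when a step fails. This is a sketch under stated assumptions: the model names in `MODEL_FOR_TOOL` are placeholders, and `run_tool`, `replan`, and the replan-on-error policy are hypothetical stand-ins. The post does not say which models back which tools or exactly when the router returns to the planner.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Placeholder model names: a stronger model where quality matters, a cheaper one elsewhere.
MODEL_FOR_TOOL = {"sql_query": "model-large", "summarize": "model-small"}
DEFAULT_MODEL = "model-small"


@dataclass
class Step:
    tool: str
    input: str


def route(plan: list[Step],
          run_tool: Callable[[str, str, str], str],
          replan: Callable[[list[Step], int, Exception], list[Step]],
          max_replans: int = 2) -> list[str]:
    """Run each step in order; on failure, ask the planner to rewrite the remaining steps."""
    outputs: list[str] = []
    replans = 0
    i = 0
    while i < len(plan):
        step = plan[i]
        model = MODEL_FOR_TOOL.get(step.tool, DEFAULT_MODEL)
        try:
            outputs.append(run_tool(step.tool, model, step.input))
            i += 1
        except Exception as err:  # broad catch for the sketch; real code would be narrower
            if replans >= max_replans:
                raise
            replans += 1
            # Keep completed steps; the planner supplies new steps from index i onward.
            plan = plan[:i] + replan(plan, i, err)
    return outputs


# Hypothetical tool runner and planner, just to exercise the loop.
def fake_run_tool(tool: str, model: str, prompt: str) -> str:
    if tool == "broken_tool":
        raise RuntimeError("tool failed")
    return f"{tool}[{model}]: {prompt}"


def fake_replan(plan: list[Step], failed: int, err: Exception) -> list[Step]:
    # Swap the failing step for a summarize step and keep whatever came after it.
    return [Step("summarize", plan[failed].input)] + plan[failed + 1:]


result = route([Step("sql_query", "sales by month"), Step("broken_tool", "x")],
               fake_run_tool, fake_replan)
print(result)
```

Capping the number of replans is one simple way to stop a supervisor from looping indefinitely when a tool keeps failing.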

As execution progresses, a state is carried from step to step. This way, each tool knows what has been collected so far and what we’re trying to achieve, which lets it provide a more relevant and accurate response.
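One way to picture this carried state is a single object that every tool reads from and writes to. The `AgentState` class, its `question` and `findings` fields, the three toy tools, and their numbers are all hypothetical, loosely modelled on the correlation question in the example above.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class AgentState:
    question: str                                               # the user's original query
    findings: dict[str, object] = field(default_factory=dict)  # results collected so far


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def count_issues_tool(state: AgentState) -> AgentState:
    # Hypothetical: a real tool would query the support system. Numbers are made up.
    state.findings["issues_per_month"] = [12, 18, 9, 22]
    return state


def sales_tool(state: AgentState) -> AgentState:
    # Hypothetical: a real tool would query sales data. Numbers are made up.
    state.findings["sales_per_month"] = [100, 130, 90, 150]
    return state


def correlation_tool(state: AgentState) -> AgentState:
    # Depends on what earlier tools left in the state; it could not run on its own.
    xs = state.findings["issues_per_month"]
    ys = state.findings["sales_per_month"]
    state.findings["correlation"] = pearson(xs, ys)
    return state


state = AgentState(question="What is the correlation between the number of "
                            "customer support issues and sales?")
for tool in (count_issues_tool, sales_tool, correlation_tool):
    state = tool(state)
print(round(state.findings["correlation"], 3))
```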

In LangSmith, we can see what each tool does, its input and output, and how the tools work in sequence. This lets us troubleshoot issues, especially when the chat’s final response is not what we hoped it would be.
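LangSmith captures and displays this for us. Its Python SDK, for example, offers a `traceable` decorator for recording a function call as a run. To show the idea without depending on the SDK, here is a stdlib-only stand-in that records each step’s name, inputs, output (or error), and duration in call order. `trace_step`, `TRACE`, and the two toy steps are hypothetical names, not part of LangSmith, and unlike LangSmith this sketch keeps a flat list rather than a nested tree of runs.

```python
from __future__ import annotations

import functools
import time
from typing import Any, Callable

# Hypothetical in-memory trace; LangSmith stores and visualizes the real thing.
TRACE: list[dict[str, Any]] = []


def trace_step(fn: Callable) -> Callable:
    """Record name, inputs, output or error, and duration of each call, in order."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        record: dict[str, Any] = {"step": fn.__name__,
                                  "inputs": {"args": args, "kwargs": kwargs}}
        start = time.perf_counter()
        try:
            record["output"] = fn(*args, **kwargs)
            return record["output"]
        except Exception as err:
            record["error"] = repr(err)
            raise
        finally:
            record["seconds"] = time.perf_counter() - start
            TRACE.append(record)
    return wrapper


@trace_step
def planner(question: str) -> list[str]:
    # Stand-in for the planning call; returns tool names only.
    return ["sql_query", "python_analysis"]


@trace_step
def sql_query(prompt: str) -> str:
    # Stand-in for a data-retrieval tool.
    return f"rows for: {prompt}"


plan = planner("Correlation between support issues and sales?")
sql_query("support issues per month")

for record in TRACE:
    print(record["step"], record["inputs"]["args"], "->", record.get("output"))
```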

The day-to-day

Naturally, we don’t sit all day watching every single run in LangSmith and going over every single step. That doesn’t work at the scale we need.

Instead, we rely on multiple mechanisms, including user feedback (thumbs up/down, etc.), to identify which AI interactions warrant deeper investigation. A response might not have been as good as we wanted, or it might have been slower than expected. Or it might have been a really good response, and we want to understand what the engine did to achieve it.
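A simplified version of that triage might look like the filter below. The signals (negative feedback, slow responses, positive feedback) come from the paragraph above, but the `Run` fields, the 20-second `LATENCY_THRESHOLD_S` cutoff, and the `triage` helper are illustrative assumptions rather than our actual rules.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

LATENCY_THRESHOLD_S = 20.0  # hypothetical "slower than expected" cutoff


@dataclass
class Run:
    run_id: str
    latency_s: float
    feedback: Optional[int] = None  # +1 thumbs up, -1 thumbs down, None if no feedback


def flag_reasons(run: Run) -> list[str]:
    """Return why a run deserves a closer look; an empty list means no flag."""
    reasons = []
    if run.feedback == -1:
        reasons.append("negative feedback")
    if run.latency_s > LATENCY_THRESHOLD_S:
        reasons.append("slow")
    if run.feedback == 1:
        reasons.append("positive feedback: learn what worked")
    return reasons


def triage(runs: list[Run]) -> dict[str, list[str]]:
    """Map run IDs to their flag reasons, keeping only flagged runs."""
    flagged = {}
    for run in runs:
        reasons = flag_reasons(run)
        if reasons:
            flagged[run.run_id] = reasons
    return flagged


runs = [
    Run("a", latency_s=4.2, feedback=-1),
    Run("b", latency_s=31.0),
    Run("c", latency_s=3.1, feedback=1),
    Run("d", latency_s=2.5),
]
print(triage(runs))
```

Keeping the reasons alongside each flagged run makes the weekly review easier to prioritize, since a slow run and a thumbs-down run usually call for different investigations.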

And so, every week, our team manually reviews dozens of interactions that were flagged (manually or automatically) and inspects the engine’s behavior. Where we see room for improvement, we open JIRA tickets and follow them through. Of course, security policies and controls are in place to ensure this is done in line with our commitments to our customers, and our auditor reviews them regularly.

It’s very, very different from normal software development. This level of dynamic system behavior is something almost no one had seen before 2023, and now it’s the norm. It really feels like we’re writing the playbook while running it, and so is the rest of the market.

Next steps

We are expanding our use of LangSmith (currently its cloud offering) to help our engine keep getting better over time. We’re also moving our evals infrastructure onto LangSmith; more on that in a future blog post.

We’re quite open about what we’re building and how, so if you ever want to learn more about how the Solid platform works and how it helps people all over the world get faster, better access to data and insights, hit us up. If you just need detailed documentation and a semantic model of your data stack, we can do that for free.