The curse, and promise, of the white box

Those of us born before 1990 probably remember the first time we saw the Google search box. The paradigm shift is upon us again.

Back in the late 1990s, Google launched, and some of us began discovering it. I still remember the first time I saw the Google search engine. It was a weird experience: I didn’t know what to write in that white input box.

What can this thing do for me? What should I write here? What should I expect?

Since then, I’ve observed the same experience in other Google users, such as my mother. The white box is clean and simple, but it offers no hint of what the user should do with it.

It took the world a while to understand what it could possibly do with Google’s white box. Over time, we all got pretty good at using it the right way: typing keywords rather than full sentences, relying on its suggestions, and so forth.

But it didn’t start that way. Danny Sullivan, the founder of Search Engine Watch, said in 2000: "Google may not be good on a particular query. If you wanted to find multimedia or audio-visual files, Google wouldn't be helpful at all."

You see… Google wasn’t perfect straight out of the gate. So there was a two-sided education here. On the one hand, we, the users, learned to use this white box. On the other hand, the white box (and the developers behind it) was studying us. They were trying to understand what we thought it could do for us, and they continuously updated it to give us what we were looking for.

History does rhyme

With the explosion in AI, it seems we’re bound to experience this white-box curse again. Personally, I use dozens of different AI-based tools regularly. I’ve noticed that every time I sign up for a new tool, I ask myself: how should I best use this tool? What should I write in this box? (Most recently, this happened with Bolt.)

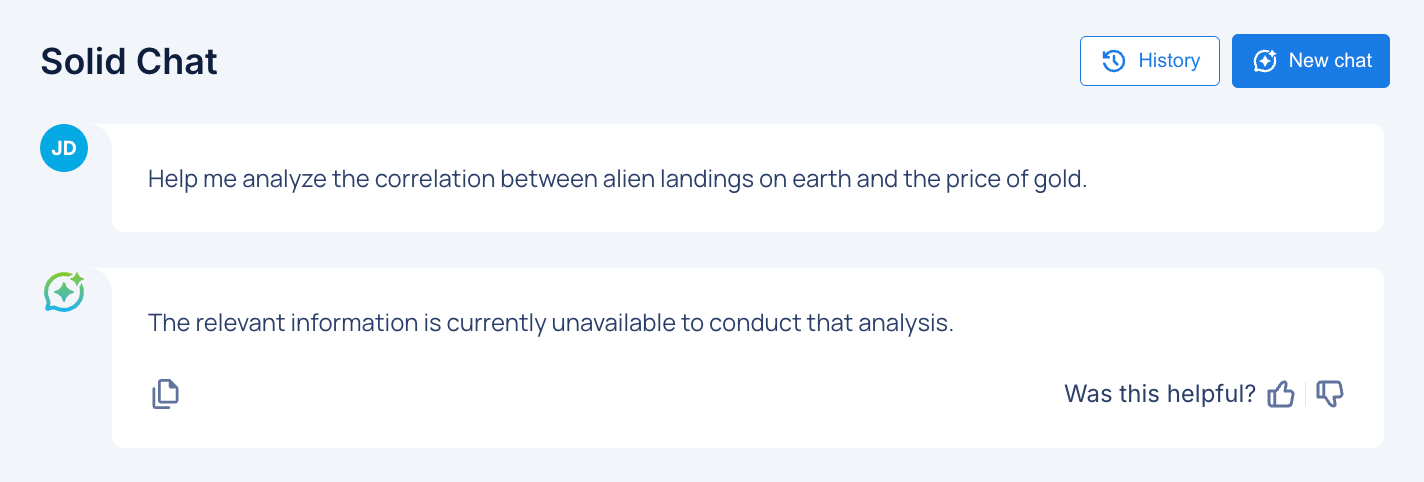

At Solid, we’ve been watching what users type into the white box of our chat feature, and boy, is it interesting!

You see, you can put suggestions below the chat box (as we have), but that’s not quite the solution. On the one hand, suggestions are useful, though many people ignore them; on the other hand, one could argue that they limit people’s imagination. You want your users to imagine, so you can truly meet their needs.

Instead, we would prefer that people ask whatever they think this product can help them answer. Hopefully, it can. Sometimes, it can’t. Our research team (led by the bright Maya Bercovitch, whose recent post on this subject was read by hundreds of people) keeps reviewing the kinds of things people write into the chat and improving the platform’s responses. Here is some of what they’ve found:

4% of the time, people say “please” and “thank you” to the AI. (personally, I think that’s a good idea, for when they rule us all…)

42% of the time, people are trying to find the right data asset to use based on a natural-language query (which is great, considering it’s one of our main use cases)

13% of the time, people need help validating that a specific asset is of good enough quality for a specific use case (also one of our main use cases)

It’s an amazing process: you see chat requests that our chat engine can’t answer, but over time it gets better, so the next time a user asks for something conceptually similar, the chat can respond.

At the same time, you’re always looking to ensure it doesn’t make stuff up, or hallucinate. AI loves to please us, and Maya’s team spends an immense amount of time “keeping it in its lane” and making sure it “behaves”.

Ohhh… what a time to be building a software product!