GenAI is consistently inconsistent, but is that a problem?

Until GenAI came around, we were all used to determinism in our interaction with computers. Same input, same output. Not with GenAI. Those building apps around GenAI need to deal with it.

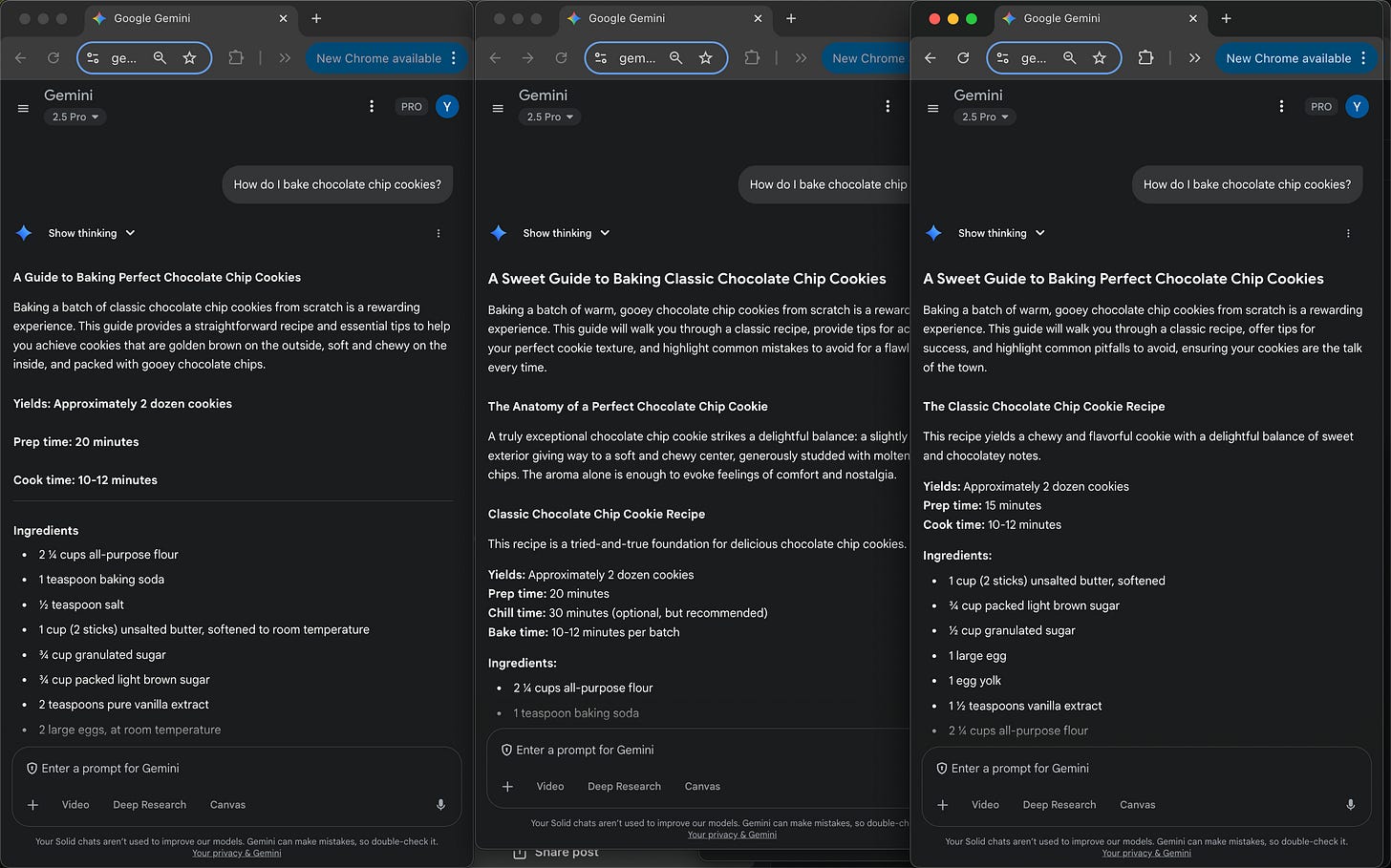

It’s Saturday afternoon, and we want to make chocolate chip cookies at the Leitersdorf home in Danville, California. Naturally, we ask AI for a recipe.

Is the prep time 15 minutes, or 20 minutes? 1 large egg and 1 yolk, or 2 large eggs? Half a cup of sugar or three-quarters? Those are minor differences, though, right? I’m pretty sure the Leitersdorf kids would be happy with any of the options presented here. How to make chocolate chip cookies isn’t a major life decision, after all.

But what happens if your AI application is meant to support an important business decision or process? There, minute details can matter tremendously, right? You expect the application to provide a consistent answer to a given question. But should you?

The double standard between humans and AI

Try a little experiment: go to three different people on your data engineering team and ask them exactly the same question (make sure they don’t discuss it among themselves, no colluding!). Not a simple question like “What warehouse do we use?”, but something like “I want to find all the users in accounts that have features X, Y, and Z enabled, and how long they’ve been using our system.”

You will get three different answers. Possibly, three very different answers. Which one is right?

Maybe the data engineer who has the most experience in the team is the right one?

Maybe the engineer who has been working on related tables recently?

Maybe none of them has the definitive, correct answer, and an analyst on another team actually knows it (because they dealt with that question just last week)?

Today, we all accept this as part of the “mess” in the organization: there is more than one way to answer a given question. It frustrates us, because these inconsistent answers spark arguments in meetings (sometimes derailing them entirely).

Still, it’s part of our reality, because, unfortunately, there really is more than one way to answer a given question.

So why is it that, when we ask an AI system a question like “Are suppliers complying with our target time to delivery days?”, we expect the same answer every time we ask it?

Poor AI, it’s held to a higher bar than humans.

How do we deal with inconsistency in GenAI then?

First of all, we need to understand the beast. GenAI has a ton of potential, and it will only get smarter. It’s already scoring high on math, legal, and coding exercises, and it’s already solving problems we couldn’t even begin to tackle before. At the same time, GenAI is built to respond by sampling from a probability distribution. Instead of providing the same answer every time, it will provide one answer X% of the time, another answer Y%, and a third answer Z%. It’s wired to behave this way.
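To make “responding from a distribution” concrete, here is a toy sketch. It assumes the model’s choice for one question can be modeled as a fixed distribution over three candidate answers; the labels and probabilities are made-up placeholders standing in for X%, Y%, and Z%, not numbers from any real model.

```python
import random
from collections import Counter

# Hypothetical distribution over three candidate answers to one question.
# The labels and probabilities are placeholders, not measured from a real model.
ANSWER_PROBS = {
    "answer X": 0.50,
    "answer Y": 0.35,
    "answer Z": 0.15,
}

def ask_model(rng: random.Random) -> str:
    """Simulate one call: draw a single answer according to ANSWER_PROBS."""
    answers = list(ANSWER_PROBS)
    weights = list(ANSWER_PROBS.values())
    return rng.choices(answers, weights=weights, k=1)[0]

def simulate(n_calls: int, seed: int = 0) -> Counter:
    """Ask the same question n_calls times and count how often each answer comes back."""
    rng = random.Random(seed)
    return Counter(ask_model(rng) for _ in range(n_calls))

if __name__ == "__main__":
    n = 10_000
    for answer, count in simulate(n).most_common():
        print(f"{answer}: {count / n:.1%}")
```

Over many calls the shares land close to the underlying probabilities, yet no single call is guaranteed to return the most likely answer. That is the behavior an application has to be designed around.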

So we need to get used to it, and embrace it.

Secondly, we need to help it be more consistent. For example, we can teach it to ask clarifying questions before it starts on a task. If the user asks “Are suppliers complying with our target time to delivery days?”, the AI can first ask “What suppliers are you looking into? All of them or only certain ones?” or “Are you looking into all delivery methods or just specific ones?”
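One way to sketch the “ask before you start” idea is a gate that runs before any query is generated. This is a hypothetical, rule-based illustration: the scope dimensions (`suppliers`, `delivery_methods`) and function names are assumptions, and the `scope` dict is assumed to be filled in by an earlier step (for example, the model extracting what the user actually specified).

```python
from typing import Optional

# Hypothetical scope dimensions this application wants pinned down before it starts
# on a supplier-delivery question, each paired with the clarifying question to ask.
REQUIRED_SCOPE = {
    "suppliers": "What suppliers are you looking into? All of them or only certain ones?",
    "delivery_methods": "Are you looking into all delivery methods or just specific ones?",
}

def clarifying_questions(scope: dict[str, Optional[str]]) -> list[str]:
    """Return a clarifying question for every required dimension the user left unspecified.

    A missing key or a value of None/empty string counts as "not specified".
    """
    return [question for key, question in REQUIRED_SCOPE.items() if not scope.get(key)]

def handle(question: str, scope: dict[str, Optional[str]]) -> str:
    """Ask for clarification first; only proceed once every dimension is pinned down."""
    missing = clarifying_questions(scope)
    if missing:
        return "Before I start: " + " ".join(missing)
    # Placeholder for the real work (generating and running the query).
    return f"Answering {question!r} for {scope['suppliers']} / {scope['delivery_methods']}"
```

Calling `handle` with an empty scope returns both clarifying questions instead of an answer, so two users asking the same vague question are steered toward the same, explicit interpretation before any data is touched.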

Or we can teach it preferences, such as “We prefer for you to pick tables from SCHEMA_A over SCHEMA_B for questions of type X, Y, Z.”
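A minimal sketch of keeping such preferences as data and rendering them into the instructions sent with every request, so they apply the same way each time. `SCHEMA_A`, `SCHEMA_B`, and the question types X, Y, Z are the placeholders from the text; the `TablePreference` structure and the prompt wording are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TablePreference:
    """Hypothetical explicit preference: for these question types, prefer one schema over another."""
    question_types: tuple[str, ...]
    preferred_schema: str
    over_schema: str

    def render(self) -> str:
        """Turn the preference into one line of natural-language instruction."""
        types = ", ".join(self.question_types)
        return (f"For questions of type {types}, prefer tables from "
                f"{self.preferred_schema} over {self.over_schema}.")

def build_instructions(base: str, prefs: list[TablePreference]) -> str:
    """Append every stored preference to the base instructions, one bullet per line, in a fixed order."""
    return "\n".join([base] + [f"- {p.render()}" for p in prefs])

# Example preference matching the one described in the text.
PREFS = [TablePreference(("X", "Y", "Z"), "SCHEMA_A", "SCHEMA_B")]
```

Storing preferences as structured data rather than ad hoc prompt text makes them easy to review, version, and apply identically to every request.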

We can, of course, tune the parameters passed to the model itself, such as temperature. That only goes so far, though: too high a temperature results in poor responses, and a setting that works this week doesn’t necessarily hold as new model versions come out.
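For intuition on what temperature does, here is the common textbook formulation: the model’s raw scores (logits) are divided by the temperature before a softmax turns them into probabilities. The logits below are made up, and real model APIs apply this internally, possibly with differences in detail.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities, scaled by temperature (must be > 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; greedy decoding is argmax instead")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Illustrative scores for three candidate answers (not from a real model).
LOGITS = [2.0, 1.0, 0.5]

if __name__ == "__main__":
    for t in (0.2, 1.0, 2.0):
        print(t, [round(p, 3) for p in softmax_with_temperature(LOGITS, t)])
```

A low temperature piles nearly all the probability onto the top answer (more consistent), while a high temperature flattens the distribution (more varied). That is the dial being tuned, and why it trades consistency against the quality and variety of responses.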

You can even add a caching layer, so that each time somebody asks “Are suppliers complying with our target time to delivery days?” they get the same answer. But what if someone asks “Are suppliers in North America complying with our target time to delivery days?” Is that the same question or not?
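A minimal sketch of such a cache, assuming exact matching on a lightly normalized question string. The normalization rules and the `answer_fn` callback (standing in for a call to the model on a cache miss) are hypothetical.

```python
import re
from typing import Callable

def normalize(question: str) -> str:
    """Lowercase, collapse whitespace, and drop trailing punctuation so trivial variants share a key."""
    q = re.sub(r"\s+", " ", question.strip().lower())
    return q.rstrip("?!. ")

class AnswerCache:
    """Exact-match cache: the same (normalized) question always returns the first answer generated."""

    def __init__(self, answer_fn: Callable[[str], str]):
        self._answer_fn = answer_fn  # hypothetical: calls the model on a cache miss
        self._store: dict[str, str] = {}
        self.misses = 0

    def ask(self, question: str) -> str:
        key = normalize(question)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._answer_fn(question)
        return self._store[key]
```

Asking the original question twice (even with different casing or spacing) returns the same cached answer, but the North America variant produces a different key, misses the cache, and gets a fresh answer. Deciding whether it *should* count as the same question is exactly the judgment call above, and no amount of string normalization settles it.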

It’s hard

Honestly, as we build an application with GenAI at its core, we grapple with this constantly. Should we try to force our AI to be highly consistent, or can we give it some room for creativity? Is there more than one way to “skin a cat”?

If the AI provides a response that aligns with data engineer #1, but not with data engineer #2, how should the application handle the situation?

Can AI learn from one person’s feedback? If one person clicked a “thumbs down” on a response and explained why, is that person right? Or should we now hold a vote across their peers?

Those are just some of the hard questions we deal with every day at Solid. If you’re interested in how we approach them, or want to help us think them through, reach out.

P.S.

No cats, nor children, were harmed in the production of this post.